1. Introduction

Why Normalization Matters in Deep Learning

I’ve spent a lot of time training deep learning models, and one thing I’ve learned the hard way is that training instability is a silent killer.

You tweak hyperparameters, try different architectures, and still, the loss graph looks like a stock market crash.

Turns out, a lot of that chaos is commonly attributed to internal covariate shift, a fancy way of saying that as training progresses, the distribution of each layer’s inputs keeps changing because the layers before it keep updating.

That’s where normalization techniques like Batch Normalization (BN) and Layer Normalization (LN) come in. They stabilize training, smooth out loss curves, and sometimes even act like a secret weapon for generalization.

But here’s the catch—not all normalization techniques work the same way. I’ve seen models perform brilliantly with BN and fail miserably with LN, and vice versa. That’s why choosing the right one matters.

Why is This Comparison Important?

There was a time when I thought Batch Normalization was the holy grail—it was everywhere in CNNs, and almost every state-of-the-art architecture used it. Then, I started working with NLP models, and suddenly, BN was useless. Layer Normalization took over, and it wasn’t just about preference—it was a necessity.

If you’ve ever trained a Transformer-based model, you know BN won’t cut it. If you’re working with CNNs, LN might not give you the same benefits. So, the real question isn’t “Which is better?” but “Which is better for your specific use case?”

Misconceptions About Normalization

I’ve come across quite a few misconceptions when discussing normalization with fellow data scientists. Let me clear up some of the biggest ones:

- “Batch Normalization is always better.” Nope. If you’re working with reinforcement learning or recurrent models, BN can actually hurt performance.

- “Layer Normalization is only useful in NLP.” Not true. LN shines in cases where batch statistics don’t make sense—like in small-batch training.

- “Normalization is just about accelerating training.” Sure, it speeds things up. But more importantly, it makes training possible for deep architectures that would otherwise struggle.

2. Understanding Batch Normalization

How BN Works Under the Hood

Let’s break this down without getting lost in too much math. BN normalizes activations across the batch dimension—meaning, for each feature, it computes the mean and variance from the entire batch and then normalizes the values accordingly. This helps keep activations centered and scaled, preventing extreme values from throwing training off.

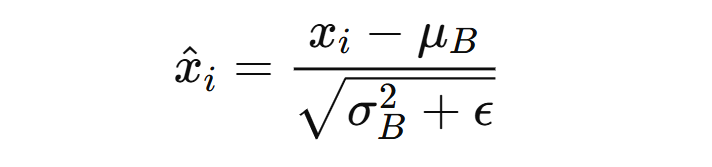

Mathematically, BN does this:

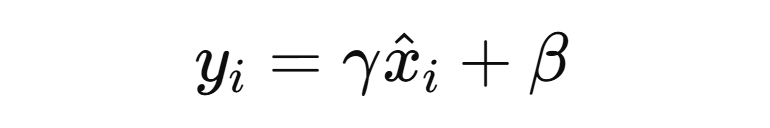

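where $\mu_B$ and $\sigma_B^2$ are the mean and variance computed per batch, so each activation is normalized as $\hat{x}_i = (x_i - \mu_B) / \sqrt{\sigma_B^2 + \epsilon}$, with a small $\epsilon$ for numerical stability. Then, a learnable affine transformation (scale and shift) is applied:

$$y_i = \gamma \hat{x}_i + \beta$$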

This part is crucial—BN doesn’t just normalize and leave it there. It lets the model learn back the optimal scale and shift, which is why it works so well in practice.
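To make the two steps concrete, here is a minimal NumPy sketch of the training-time forward pass for a `(batch, features)` input. The function name `batch_norm_train`, the `eps` value, and the toy data are my own assumptions for illustration, not any framework’s implementation.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Batch-normalize a (batch, features) array using the current batch's statistics."""
    mu = x.mean(axis=0)                      # per-feature mean over the batch
    var = x.var(axis=0)                      # per-feature (biased) variance over the batch
    x_hat = (x - mu) / np.sqrt(var + eps)    # normalize
    return gamma * x_hat + beta              # learnable scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))   # activations far from zero mean / unit variance
y = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.mean(axis=0).round(6), y.std(axis=0).round(3))
```

With `gamma` at one and `beta` at zero, every feature column comes out with roughly zero mean and unit standard deviation; during training the model is free to move both parameters away from those defaults.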

Intuition Behind BN’s Functionality

Think of BN as a traffic cop for activations. Without it, gradients can explode or vanish, making training erratic. By normalizing at every step, BN keeps gradients smooth, preventing large jumps in parameter updates.

A practical way to see this in action: Try training a deep CNN without BN and watch your learning rate behave like a wild horse—it either moves too slow or too fast, with no in-between. Add BN, and suddenly, your loss curve looks much more predictable.

Advantages of Batch Normalization

✅ Smoother Loss Landscape

I’ve personally seen BN turn chaotic loss curves into smooth, converging ones. By keeping activations well-behaved, it makes optimization more stable.

✅ Faster Convergence

If you’ve trained deep networks, you know tuning the learning rate is an art. BN allows you to use higher learning rates without breaking training, which means faster convergence.

✅ Acts as Regularization

I used to rely heavily on dropout to prevent overfitting. But with BN, I noticed I could reduce or even remove dropout entirely, and the model still generalized well. That’s because BN adds noise to training (due to batch statistics changing every iteration), acting as implicit regularization.
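The noise is easy to see in a toy setting. In the sketch below (names and data are illustrative assumptions), the same sample is normalized alongside three different sets of random batch-mates, and its normalized values change each time.

```python
import numpy as np

def batch_norm_train(x, eps=1e-5):
    """Normalize each feature with the statistics of the current batch (no affine)."""
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

rng = np.random.default_rng(1)
sample = np.array([[1.0, -2.0, 0.5]])        # the sample we track

outputs = []
for _ in range(3):
    others = rng.normal(size=(7, 3))         # different batch-mates each iteration
    batch = np.vstack([sample, others])
    outputs.append(batch_norm_train(batch)[0])

outputs = np.array(outputs)
spread = outputs.std(axis=0)                 # how much the same sample's output moves
print(outputs.round(3))
print(spread.round(3))
```

The nonzero `spread` is the per-iteration jitter that BN injects during training, which is the effect described above as implicit regularization.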

✅ Helps Deeper Networks Train Efficiently

Before BN, training deep networks was a nightmare. BN lets you go deeper without worrying about vanishing/exploding gradients. That’s why almost every modern CNN architecture—from ResNets to EfficientNets—relies on it.

Limitations of Batch Normalization

🚨 Batch Dependency

I once tried using BN with small batch sizes, and the results were disappointing. That’s because BN relies on batch statistics—if your batch size is too small, these statistics become unreliable, leading to unstable training.
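A quick way to see why small batches hurt is to measure how much the batch mean itself jumps around from batch to batch. This is a rough sketch on synthetic data; the population, the batch sizes, and the helper name `batch_mean_spread` are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
population = rng.normal(loc=0.0, scale=1.0, size=100_000)  # stand-in for one feature's activations

def batch_mean_spread(batch_size, n_batches=1000):
    """Standard deviation of the per-batch mean across many random batches."""
    batches = rng.choice(population, size=(n_batches, batch_size))
    return batches.mean(axis=1).std()

small = batch_mean_spread(2)
large = batch_mean_spread(256)
print(f"batch=2: {small:.3f}  batch=256: {large:.3f}")
```

The estimate from two samples is far noisier than the one from 256, so with tiny batches every forward pass normalizes with a noticeably different mean and variance.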

🚨 Inference-Time Inconsistency

During training, BN uses batch statistics (mean & variance of the batch). But during inference, it switches to running estimates (computed across training). This can sometimes lead to discrepancies—especially if batch statistics shift significantly over time.
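Here is a stripped-down sketch of that train/inference switch. The class name, the `momentum` value, and the use of the biased variance for the running estimate are assumptions made for brevity; real frameworks differ in these details and also include the learnable scale and shift.

```python
import numpy as np

class BatchNorm1D:
    """Minimal BN sketch with separate training and inference behaviour (no affine)."""

    def __init__(self, num_features, momentum=0.1, eps=1e-5):
        self.running_mean = np.zeros(num_features)
        self.running_var = np.ones(num_features)
        self.momentum = momentum
        self.eps = eps
        self.training = True

    def __call__(self, x):
        if self.training:
            mu, var = x.mean(axis=0), x.var(axis=0)
            # Exponential moving average of the batch statistics
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mu
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            # Inference: fixed running estimates, no dependence on the current batch
            mu, var = self.running_mean, self.running_var
        return (x - mu) / np.sqrt(var + self.eps)

rng = np.random.default_rng(0)
bn = BatchNorm1D(3)
for _ in range(200):                                   # "training" on batches centered at 2.0
    bn(rng.normal(loc=2.0, scale=0.5, size=(32, 3)))

bn.training = False
out_eval = bn(np.array([[2.0, 2.0, 2.0]]))             # a batch of one works at inference
print(bn.running_mean.round(2), out_eval.round(2))
```

If the data seen at inference drifts away from what the running estimates captured, the normalized activations drift with it, which is the discrepancy mentioned above.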

🚨 Not Well-Suited for RNNs and Small Dataset Scenarios

This one’s crucial. If you’re working with sequence models (like LSTMs, GRUs), BN can hurt performance because activation statistics vary across time steps, so a single set of batch statistics fits them poorly. That’s why RNN-based models almost never use BN.

3. Understanding Layer Normalization

How LN Works

I remember the first time I tried Layer Normalization (LN)—I was working on an NLP model, and Batch Normalization just wasn’t cutting it. The model was highly sensitive to batch size, and training was inconsistent. That’s when I realized: BN depends on batch statistics, but what if my batch sizes are small or dynamically changing?

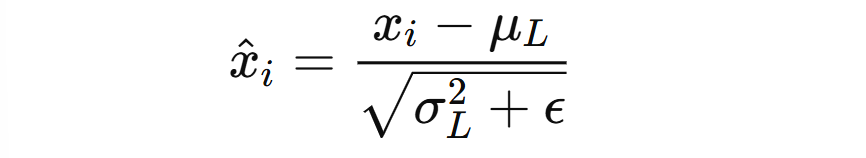

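That’s exactly where LN shines. Unlike BN, which normalizes across the batch, LN normalizes across neurons within a single sample. Mathematically, for an input $x$ with $N$ neurons, LN computes:

$$\mu_L = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad \sigma_L^2 = \frac{1}{N}\sum_{i=1}^{N} (x_i - \mu_L)^2, \qquad \hat{x}_i = \frac{x_i - \mu_L}{\sqrt{\sigma_L^2 + \epsilon}}$$

where $\mu_L$ and $\sigma_L^2$ are the mean and variance across the neurons of a single sample instead of a batch, and $\epsilon$ is a small constant for numerical stability. Just like BN, it also applies learnable parameters $\gamma$ (scale) and $\beta$ (shift) to maintain representational power.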
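In code, the only real change from BN is the axis the statistics are taken over. A minimal NumPy sketch, where the function name `layer_norm`, the `eps` value, and the toy input are assumptions for illustration:

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize each row (one sample) over its own features."""
    mu = x.mean(axis=-1, keepdims=True)      # one mean per sample
    var = x.var(axis=-1, keepdims=True)      # one variance per sample
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma * x_hat + beta              # learnable scale and shift

x = np.array([[1.0, 2.0, 3.0, 4.0],
              [10.0, 20.0, 30.0, 40.0]])     # second sample is the first one times 10
y = layer_norm(x, gamma=np.ones(4), beta=np.zeros(4))
print(y.round(3))
```

Both rows end up with nearly identical normalized values even though their raw scales differ by a factor of ten, because each sample is normalized against its own mean and variance.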

Intuition Behind LN’s Functionality

You might be wondering: Why does LN work better for certain architectures?

Here’s how I see it—Batch Normalization adapts to the dataset’s overall distribution, but LN adapts to each individual input. This makes LN extremely useful when:

- Batch sizes are small or variable (like in reinforcement learning or online learning)

- Sequences matter, like in NLP and RNNs, where normalization across batch samples doesn’t make sense

- Inference needs consistency, since LN doesn’t rely on batch statistics that can shift over time

From my experience, once you move into NLP, LN becomes a necessity, not a choice. That’s why almost every Transformer-based model (BERT, GPT, T5) ditches BN in favor of LN.

Advantages of Layer Normalization

✅ Works Well for NLP and RNN-Based Architectures

I’ve personally seen LN outperform BN in Transformer-based models. Since NLP relies on sequences rather than independent samples, normalizing across neurons instead of batch samples makes a huge difference.

✅ Consistent Performance Regardless of Batch Size

This is a game-changer. With BN, performance can degrade if you change batch sizes. LN? Completely independent of batch size, which means training is much more stable.
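You can check the batch-size independence directly. In this sketch (function names and random data are illustrative assumptions), the same sample is normalized once inside a batch of eight and once inside a batch of two.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def batch_norm_train(x, eps=1e-5):
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

rng = np.random.default_rng(0)
batch = rng.normal(size=(8, 5))
small_batch = batch[:2]                      # same first sample, fewer batch-mates

# Compare the first sample's output under the two batch sizes
ln_same = np.allclose(layer_norm(batch)[0], layer_norm(small_batch)[0])
bn_same = np.allclose(batch_norm_train(batch)[0], batch_norm_train(small_batch)[0])
print("LN unchanged:", ln_same, "| BN unchanged:", bn_same)
```

LN returns the same values for that sample in both cases, while the BN output shifts as soon as the batch composition changes.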

✅ No Reliance on Batch Statistics → Ideal for Reinforcement Learning & Online Learning

I remember struggling with BN in reinforcement learning (RL) because in RL, batch statistics don’t mean much—the data is dynamic. LN solved this issue because it doesn’t rely on batch-level statistics at all.

Limitations of Layer Normalization

🚨 Computationally More Expensive Than BN

One thing I noticed early on: LN can be more costly than BN at inference, because it has to recompute statistics for every sample, whereas BN uses fixed running estimates that can be folded into the preceding layer’s weights. If you’re working with massive CNN architectures, this can slow things down.

🚨 May Not Always Generalize as Well as BN in CNNs

For computer vision tasks, I still prefer BN. Why? Because CNNs naturally benefit from spatial correlations, and BN computes one set of statistics per channel, shared across all spatial positions, so each feature map keeps its relative spatial structure. LN doesn’t always provide the same level of regularization that BN does in image models.

4. Key Differences: Batch Normalization vs. Layer Normalization

At this point, you might be thinking: “Okay, both BN and LN have their strengths, but when exactly should I use one over the other?”

Here’s a straight-to-the-point comparison based on my experience working with both:

| Feature | Batch Normalization (BN) | Layer Normalization (LN) |
|---|---|---|
| Normalization Scope | Across batch samples | Across neurons in a single sample |
| Works Well With | CNNs, Large batch sizes | RNNs, NLP, Small batch sizes |
| Dependence on Batch Size | Yes, highly dependent | No, completely independent |
| Effect on Training Stability | Reduces internal covariate shift | Reduces neuron-wise variance |
| Inference Consistency | Needs running mean & variance | No reliance on batch statistics |
| Additional Computational Cost | Less expensive (batch-level computation) | More expensive (per-sample computation) |

Final Thoughts on BN vs. LN

- If you’re working with CNNs → Stick to Batch Normalization

- If you’re working with NLP or RNNs → Layer Normalization is your best bet

- If batch size is small or variable → LN is the clear winner

- If you’re working in reinforcement learning or online learning → BN will cause issues; go for LN

I’ve personally faced scenarios where choosing the wrong normalization led to unstable training, slow convergence, or even complete failure to train. Hopefully, now you won’t have to learn that lesson the hard way.

5. Practical Use Cases & When to Use What?

At this point, you might be wondering: “Okay, I get how BN and LN work, but when exactly should I use one over the other?”

I’ve faced this exact question many times when building deep learning models. The truth is, there’s no universal winner—it depends entirely on the architecture and constraints you’re working with.

Let’s break it down.

When to Prefer Batch Normalization?

From my experience, BN is the go-to when working with CNNs and vision models. Here’s why:

✅ CNNs, ResNets, and Vision Models

I’ve used BN extensively in CNN-based architectures like ResNet, EfficientNet, and VGG. The reason? BN helps smooth out optimization, making training more stable even when using aggressive learning rates.

✅ When Batch Sizes Are Large

BN relies on batch statistics, so it performs best when you have large, consistent batch sizes. If you’re working with GPUs with high memory, BN is usually a safe bet.

✅ When Training Is Unstable Due to High Learning Rates

Ever had a model that just wouldn’t converge because of exploding gradients? BN can act as a stabilizer, allowing you to train with higher learning rates without diverging.

👉 Bottom Line: If you’re working with CNNs or large-batch scenarios, BN is the way to go.

When to Prefer Layer Normalization?

I’ve found that LN is indispensable when working with NLP models and small-batch training scenarios. Here’s where LN shines:

✅ NLP Models & Transformers (BERT, GPT, T5, etc.)

If you’ve worked with Transformers, you’ve probably noticed something—none of them use Batch Normalization. Instead, they all use LN. The reason? BN relies on batch statistics, which don’t work well for variable-length sequences.

✅ RNNs (LSTMs, GRUs) Where Batch Dependence Is a Problem

I once tried BN with an LSTM model and quickly learned my mistake: batch dependence can badly hurt RNN performance. LN, on the other hand, normalizes each sample independently at every time step, which makes it a natural fit for recurrent models.

✅ Small Batch Size Training Scenarios

This is a big one. If your batch size is small (either due to memory constraints or the nature of your data), BN will struggle because batch statistics become unreliable. LN doesn’t have this issue, making it a much better choice.

👉 Bottom Line: If you’re working with NLP, RNNs, or small batch sizes, LN is the better option.

6. Advanced Insights: Beyond the Basics

Now that we’ve covered the practical aspects, let’s go a level deeper. BN and LN aren’t the only normalization techniques—there are other approaches that extend the debate even further.

Group Normalization & Instance Normalization: Where Do They Fit?

You might be wondering: “What if I want the benefits of BN but can’t afford batch dependence?”

That’s where Group Normalization (GN) and Instance Normalization (IN) come in.

🔹 Group Normalization (GN) → Instead of normalizing over the batch, GN normalizes within small groups of channels. It works well for vision tasks when batch sizes are small (e.g., medical imaging or object detection models).

🔹 Instance Normalization (IN) → This is often used in style transfer and generative models. Unlike BN or LN, IN normalizes each feature map independently within a single image. That removes instance-specific contrast statistics from each channel, which makes it a good fit for tasks where every image should be normalized on its own rather than against the rest of the batch.

👉 Key Takeaway: If BN or LN isn’t working, Group Norm (for vision) or Instance Norm (for style-based models) might be the answer.
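One way to keep the four techniques straight is to view them as the same operation applied over different axes of an `(N, C, H, W)` tensor. The sketch below leaves out the learnable scale and shift, and the helper names, tensor shape, and the choice to apply LN over `(C, H, W)` are assumptions for illustration.

```python
import numpy as np

def normalize(x, axes, eps=1e-5):
    """Subtract the mean and divide by the std computed over the given axes."""
    mu = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def group_norm(x, num_groups, eps=1e-5):
    """Split channels into groups and normalize within each group, per sample."""
    n, c, h, w = x.shape
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    return normalize(grouped, axes=(2, 3, 4), eps=eps).reshape(n, c, h, w)

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 8, 6, 6))   # (N, C, H, W)

bn = normalize(x, axes=(0, 2, 3))    # per channel, over batch and spatial positions
ln = normalize(x, axes=(1, 2, 3))    # per sample, over channels and spatial positions
inn = normalize(x, axes=(2, 3))      # per sample and channel, over spatial positions
gn = group_norm(x, num_groups=2)     # per sample, over each group of 4 channels

print(np.allclose(group_norm(x, 1), ln), np.allclose(group_norm(x, 8), inn))
```

Seen this way, GN sits between LN and IN: with a single group it matches LN, and with one group per channel it matches IN. Only BN touches the batch axis, which is why it is the only one of the four that depends on batch size.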

Normalization in Transformers: Why Did LN Replace BN?

When I first started working with Transformer-based models, I was curious—why did they all abandon BN?

Here’s what I found:

📌 BN relies on batch statistics, which aren’t stable in NLP because sentences vary in length and structure.

📌 LN works per sample, ensuring consistent normalization even for dynamically changing input sequences.

📌 BN introduces batch dependence, making it hard to use for online learning or autoregressive models like GPT.

That’s why modern NLP architectures overwhelmingly use LN or close per-sample variants of it, ensuring consistent and stable training across different input sizes.
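The variable-length problem can be sketched with a padded batch. Below, the same three-token sentence is batched once with a short partner and once with a long one. The naive BN here computes statistics over every position including padding, which is an assumption made to show the effect (masked variants exist); all names and shapes are illustrative.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def batch_norm_tokens(x, eps=1e-5):
    """Naive BN on a padded (batch, time, features) array: stats per feature over batch and time."""
    mu = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
d_model = 4
sentence = rng.normal(size=(3, d_model))               # 3 real tokens

def pad_batch(partner_len, max_len=10):
    """Batch our sentence with one random partner sentence, zero-padded to max_len."""
    batch = np.zeros((2, max_len, d_model))
    batch[0, :3] = sentence
    batch[1, :partner_len] = rng.normal(size=(partner_len, d_model))
    return batch

short_partner = pad_batch(partner_len=4)
long_partner = pad_batch(partner_len=10)

ln_stable = np.allclose(layer_norm(short_partner)[0, :3], layer_norm(long_partner)[0, :3])
bn_stable = np.allclose(batch_norm_tokens(short_partner)[0, :3], batch_norm_tokens(long_partner)[0, :3])
print("LN stable:", ln_stable, "| BN stable:", bn_stable)
```

The LN output for our sentence does not care what else is in the batch or how much padding there is, while the BN output changes with the partner’s length.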

Do We Even Need Normalization? The Rise of Normalization-Free Networks

This might surprise you: Some recent advancements suggest we might not even need normalization at all.

In approaches like Fixup initialization and Normalizer-Free Networks (NFNets), researchers have found ways to design deep networks that don’t require BN or LN for stability. Instead, they rely on:

- Careful weight initialization

- Skip connections and residual scaling

- Adaptive gradient clipping

While these approaches aren’t mainstream yet, they hint at a future where we might not need normalization layers at all.
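As a rough idea of what the last item in that list can look like, here is a sketch of one ratio-based formulation of adaptive gradient clipping: each output unit’s gradient is rescaled whenever its norm exceeds a fraction of the corresponding weight norm. The function name, the `clip` and `eps` values, and the row-per-unit layout are assumptions, not a reference implementation.

```python
import numpy as np

def adaptive_grad_clip(grad, weight, clip=0.01, eps=1e-3):
    """Rescale each row of grad so that ||grad_row|| / ||weight_row|| <= clip."""
    w_norm = np.maximum(np.linalg.norm(weight, axis=1, keepdims=True), eps)
    g_norm = np.linalg.norm(grad, axis=1, keepdims=True)
    max_norm = clip * w_norm
    # Shrink only the rows whose gradient is too large relative to the weights
    scale = np.where(g_norm > max_norm, max_norm / np.maximum(g_norm, 1e-12), 1.0)
    return grad * scale

weight = np.array([[1.0, 0.0],
                   [0.0, 2.0]])
grad = np.array([[3.0, 4.0],       # norm 5.0, far above 0.1 * ||w|| = 0.1
                 [0.001, 0.0]])    # tiny gradient, left untouched
clipped = adaptive_grad_clip(grad, weight, clip=0.1)
print(clipped)
```

The first row is scaled down to norm 0.1 while keeping its direction, and the second row passes through unchanged. Tying the threshold to the weight norm is what makes the clipping "adaptive" compared with a single global threshold.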

👉 Final Thought: BN and LN are essential today, but deep learning is evolving fast. Who knows? Maybe in a few years, we’ll be discussing normalization-free architectures instead.

Final Takeaways

- BN is best for CNNs and large batch sizes

- LN is the go-to for NLP, Transformers, and RNNs

- For small-batch vision tasks, Group Norm is worth considering

- Instance Norm is ideal for style-based models

- The future might not need normalization at all!

I’ve personally seen cases where choosing the wrong normalization method ruined model performance, so hopefully, this guide helps you make the right call the first time. 🚀

7. Conclusion

By now, you should have a solid understanding of Batch Normalization (BN) vs. Layer Normalization (LN)—not just the theory but also the real-world trade-offs. Let’s wrap things up with the key takeaways and a look at where normalization techniques are heading.

Key Takeaways: When & Why to Use BN vs. LN

If there’s one thing I’ve learned from experimenting with BN and LN across different models, it’s this: normalization is not one-size-fits-all. Here’s a quick recap to help you make the right choice:

✅ Choose Batch Normalization (BN) when:

✔ You’re working with CNNs or vision models (ResNet, EfficientNet, etc.)

✔ Your batch sizes are large and stable

✔ You need to train faster with higher learning rates

✔ You want implicit regularization without dropout

✅ Choose Layer Normalization (LN) when:

✔ You’re dealing with NLP, Transformers, or RNNs (BERT, GPT, LSTMs)

✔ Your batch sizes are small or variable

✔ You need batch-independent training, like in reinforcement learning

✔ You’re working with online learning or streaming data

TL;DR: BN is your go-to for vision, LN is king in NLP.

Final Thoughts: Choosing Normalization Strategically

Here’s my advice: Don’t just use BN or LN because it’s the default. Take a step back and think:

👉 What’s my architecture? (CNN? Transformer? RNN?)

👉 What’s my batch size? (Large? Small? Dynamic?)

👉 Am I working with vision or NLP? (BN → Vision, LN → NLP)

👉 Do I need batch-independent training? (If yes, LN wins)
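If it helps, the checklist above can be written down as a tiny helper. This is only a sketch of the rules of thumb from this article; the function name, the architecture labels, and especially the `small_batch` threshold of 16 are hypothetical choices, not established cutoffs.

```python
SEQUENCE_ARCHS = {"transformer", "rnn", "lstm", "gru"}

def suggest_normalization(architecture, batch_size,
                          needs_batch_independence=False, small_batch=16):
    """Return a starting-point suggestion; always validate on your own model."""
    arch = architecture.lower()
    if arch in SEQUENCE_ARCHS or needs_batch_independence:
        return "LayerNorm"
    if batch_size < small_batch:
        # Small-batch vision models: Group Norm is worth considering
        return "GroupNorm" if arch == "cnn" else "LayerNorm"
    return "BatchNorm"

print(suggest_normalization("CNN", batch_size=256))
print(suggest_normalization("Transformer", batch_size=256))
print(suggest_normalization("CNN", batch_size=4))
```

Treat the output as a first guess to try, not a verdict; the whole point of the checklist is to make you stop and think before accepting the default.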

Choosing the right normalization technique can mean the difference between a model that barely trains and one that reaches SOTA performance. I’ve made mistakes in the past by blindly following BN for everything—don’t make the same mistake.

At the end of the day, normalization is just a tool. The real magic comes from knowing when and how to use it effectively.
