1. Introduction

“Your eyes say more than your words ever could.” – That’s not just a poetic thought; it’s a reality that technology is now learning to interpret.

Eye tracking has fascinated me for years, especially with its applications in AR/VR, gaming, marketing, and even assistive technology. The idea that a system can analyze where you’re looking, track your gaze movements, and even predict intent—it’s both exciting and powerful.

When I first started working on eye tracking with OpenCV, I quickly realized that it’s more than just detecting eyes in a frame. You need to understand facial landmarks, extract eye regions accurately, track pupil movements, and optimize everything for real-time performance.

In this guide, I’ll take you step by step through the process of building a real-time eye-tracking system using OpenCV and dlib. By the end, you’ll have a working gaze tracking system that can detect and follow eye movement in real time using your webcam.

Before we dive in, here’s what you need to know:

✔ Skill level: If you’re comfortable with Python and OpenCV basics, you’re good to go.

✔ What you’ll learn: How to detect eyes, track gaze, and even detect blinks using OpenCV.

✔ What you’ll build: A real-time eye tracker that works with a webcam.

Let’s get started.

2. Setting Up the Environment

Before we can track eyes, we need to get the right tools in place. I’ve worked with multiple eye-tracking implementations, and trust me—setting up the environment correctly saves you hours of debugging later.

Installing Dependencies

You’ll need the following libraries:

- OpenCV – for image processing.

- dlib – for facial landmark detection.

- NumPy – for handling arrays.

- imutils – for simple image transformations.

To install everything, just run:

    pip install opencv-python dlib numpy imutils

Now, here’s where things get tricky: installing dlib. If you’re on Windows, you might run into build errors because dlib requires CMake and Visual Studio Build Tools. I’ve personally dealt with this headache, so here’s a quick fix:

Fix for dlib Installation on Windows

- First, install CMake:

  - Download it from cmake.org.

  - Make sure to check “Add CMake to system PATH” during installation.

- Install Visual Studio Build Tools (if not already installed):

  - Download from Microsoft.

  - During installation, select “C++ build tools”.

- Finally, try reinstalling dlib:

    pip install dlib

If you’re on Linux or Mac, it’s usually much simpler. As long as CMake and a C++ compiler are available, you can install dlib normally and you’re good to go.
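Once the installs finish, a quick check along these lines can confirm that everything is importable before you go further. This is only a minimal sketch: `check_dependencies` is a hypothetical helper name (not part of any library), and the module list assumes the four packages above, with `cv2` being the import name for opencv-python.

```python
import importlib.util

# Import names for the packages installed above (cv2 comes from opencv-python)
REQUIRED_MODULES = ["cv2", "dlib", "numpy", "imutils"]

def check_dependencies(modules=REQUIRED_MODULES):
    """Return a dict mapping each module name to True if Python can find it."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}

if __name__ == "__main__":
    for name, found in check_dependencies().items():
        print(f"{name}: {'OK' if found else 'MISSING'}")
```

If dlib shows up as MISSING on Windows, that usually points back to the CMake and build tools steps above.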

CPU vs. GPU for Eye Tracking

You might be wondering—should I use a GPU for real-time eye tracking?

Here’s my take:

✔ For casual use & prototyping: A modern CPU is good enough. OpenCV is highly optimized for CPU performance.

✔ For large-scale applications (VR, gaming, robotics): A GPU can speed up face detection, especially if you’re using deep learning-based tracking.

Now that we’ve set up everything, it’s time to dive into the actual tracking process—starting with detecting the face and identifying key facial landmarks. Let’s move forward.

3. Understanding the Eye Tracking Process

“The eyes are the windows to the soul.” – or, in our case, the perfect markers for tracking human behavior.

When I first started working on eye tracking, I thought it would be as simple as detecting the eyes and following their movement. But I quickly learned that accurate eye tracking isn’t just about detecting eyes—it’s about understanding the entire facial structure.

Breaking Down the Eye Tracking Pipeline

Eye tracking involves four key steps, and skipping even one can throw off your results:

- Face Detection – First, we need to find the face in the frame.

- Landmark Detection – Next, we identify key facial points, specifically around the eyes.

- Eye Region Extraction – We then isolate the eyes for more precise tracking.

- Eye Movement Tracking – Finally, we analyze the eye movement and determine gaze direction.

You might be wondering: Why not just detect the eyes directly instead of going through all these steps?

Well, here’s what I learned the hard way—eye detection alone is unreliable. If a person tilts their head slightly or the lighting changes, direct eye detection can fail. That’s why we use facial landmarks—they provide a stable reference for detecting eyes, no matter how the face moves.

Why dlib’s 68 Facial Landmarks?

After experimenting with multiple approaches, I found that dlib’s 68-point landmark model is one of the most reliable methods for eye tracking.

Here’s why:

✔ It’s robust—works across different lighting conditions and face angles.

✔ It’s lightweight—runs efficiently on CPU without requiring heavy deep-learning models.

✔ It gives us precise landmark points around the eyes, making tracking much smoother.

Now, let’s talk about the exact landmark points used for eye tracking.

Key Landmark Points for Eye Tracking

dlib assigns 68 unique points to a face, but for eye tracking, we only care about 12 of them:

- Left Eye (Points 36-41)

- Right Eye (Points 42-47)

Here’s a quick visualization of how they are mapped:

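        37  38                43  44
    36          39        42          45
        41  40                47  46

      Left eye (36-41)      Right eye (42-47)

Each eye is outlined by six points: two at the corners, two along the upper lid, and two along the lower lid. These points help us define the shape of each eye, allowing us to extract and analyze them separately.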

For example, if we want to extract the left eye region, we simply take:

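    left_eye = landmarks[36:42]

Similarly, for the right eye:

    right_eye = landmarks[42:48]

Note that slicing like this assumes the landmarks have already been converted to a list or NumPy array of (x, y) points. The raw dlib result is read one point at a time with landmarks.part(n).

Now that we’ve mapped out the eyes, the next step is extracting and processing them for real-time tracking. That’s where things get interesting, because tracking pupils in motion isn’t as straightforward as you’d think. Let’s dive into that next.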
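Before moving on, here is one way the conversion that makes those slices possible could look. It is a sketch under assumptions: `shape_to_points` and `split_eyes` are hypothetical helper names, and `shape` stands for the object returned by dlib’s landmark predictor, of which only `part(i)` and `num_parts` are used.

```python
def shape_to_points(shape):
    """Convert a dlib landmark result into a plain list of (x, y) tuples."""
    return [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]

def split_eyes(points):
    """Return (left_eye, right_eye) using the index ranges from the text."""
    return points[36:42], points[42:48]
```

With the points in a plain list, the slices shown earlier work as written, and wrapping the list in `numpy.array(...)` gives the array form if you prefer vectorized math.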

4. Face Detection and Landmark Detection

“Seeing is believing” — but in computer vision, detecting is believing.

When I first tried implementing eye tracking, I underestimated how crucial reliable face detection is. Poor detection can throw off everything downstream — your landmarks won’t align properly, and your gaze tracking will be all over the place.

After experimenting with various approaches, I found that dlib’s face detector offers a great balance between speed and accuracy. Depending on your needs, you can choose between two powerful options:

- HOG-based Detector — Lightweight and fast; perfect for CPU-based real-time tracking.

- CNN-based Detector — More accurate but heavier; better suited for GPU-based systems or when precision matters most.

For real-time eye tracking on a standard webcam, I’ve had great results using the HOG-based detector — it’s fast enough to keep things smooth without compromising accuracy.
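If you want to keep the option of switching detectors later, a small wrapper can hide the difference between the two. The sketch below assumes dlib’s `cnn_face_detection_model_v1` class and a locally downloaded CNN model file; the default file name `mmod_human_face_detector.dat` and the helper names `load_face_detector` and `to_rectangles` are assumptions for illustration. The CNN detector returns objects that carry the box in a `.rect` attribute, while the HOG detector returns rectangles directly, so `to_rectangles` normalizes both.

```python
def load_face_detector(use_cnn=False, cnn_model_path="mmod_human_face_detector.dat"):
    """Return dlib's HOG detector, or the CNN detector if requested."""
    import dlib  # Imported lazily so to_rectangles() works without dlib installed
    if use_cnn:
        return dlib.cnn_face_detection_model_v1(cnn_model_path)
    return dlib.get_frontal_face_detector()

def to_rectangles(detections):
    """Normalize detector output to plain rectangles.

    HOG detections are already rectangles; CNN detections expose the
    rectangle through a .rect attribute.
    """
    return [getattr(d, "rect", d) for d in detections]
```

The rest of the pipeline can then call `to_rectangles(detector(gray))` and treat the result the same way regardless of which detector produced it.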

Step 1: Loading the Face Detector and Landmark Predictor

Here’s how I usually set things up in my projects:

    import cv2
    import dlib

    # Load dlib's face detector (HOG-based)
    detector = dlib.get_frontal_face_detector()

    # Load the 68-point facial landmark predictor
    predictor = dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")

Pro Tip: If you’re new to this, that .dat file is critical: it’s the pre-trained model for detecting those 68 facial landmarks.
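Because loading fails outright when that file is missing, it can help to check for it first and raise a clearer message. This is a minimal sketch; `require_model_file` is a hypothetical helper, and the default path simply reuses the file name from the snippet above.

```python
import os

def require_model_file(path="shape_predictor_68_face_landmarks.dat"):
    """Return the absolute path to the model file, or raise a descriptive error."""
    full_path = os.path.abspath(path)
    if not os.path.isfile(full_path):
        raise FileNotFoundError(
            f"Landmark model not found at {full_path}. "
            "Download it and place it there, or pass the correct path."
        )
    return full_path
```

You would then load the predictor with `dlib.shape_predictor(require_model_file())`, so a misplaced file is reported with the exact location that was searched.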

Step 2: Capturing Frames and Detecting Faces

Next, let’s capture video from the webcam and detect faces:

    cap = cv2.VideoCapture(0)

    while True:
        ret, frame = cap.read()
        if not ret:  # Camera unavailable or stream ended
            break

        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # Detect faces
        faces = detector(gray)

        for face in faces:
            landmarks = predictor(gray, face)

            # Draw eye landmarks
            for n in range(36, 48):  # Eye region points
                x, y = landmarks.part(n).x, landmarks.part(n).y
                cv2.circle(frame, (x, y), 2, (255, 0, 0), -1)

        cv2.imshow("Eye Tracking", frame)

        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()

Step 3: Common Errors and Troubleshooting

This is where I hit my first roadblock — and if you’ve tried this before, you’ve probably seen this error too:

    RuntimeError: Unable to open shape_predictor_68_face_landmarks.dat

I remember scratching my head for a while before realizing the issue: the .dat file wasn’t in the correct directory.

Here’s how you fix it:

- Download the .dat file from dlib’s official model page.

- Extract it and place it in your working directory.

- If you’re using a different path, be sure to specify it like this:

    predictor = dlib.shape_predictor("path/to/shape_predictor_68_face_landmarks.dat")

Pro Tip:

When working with multiple faces, dlib’s detector will return all detected faces as rectangles. From my experience, it’s a good idea to sort them by size (largest first) since the closest face is often the one you care about most. Here’s a quick way to sort detected faces:

    faces = sorted(faces, key=lambda face: face.width() * face.height(), reverse=True)

This trick has saved me countless headaches when working on projects that involve multiple subjects in frame.

With the face and eye landmarks in place, we’re now ready to move on to the real challenge — isolating the eye regions and tracking pupil movement.

Let’s jump right in.

5. Isolating and Extracting Eye Regions

“The eyes are the window to the soul.” But in computer vision, they’re just another region of interest (ROI) that needs careful extraction.

I remember the first time I tried eye tracking—my biggest mistake was assuming that detecting the face was enough. Turns out, eye tracking demands precision, and even slight misalignment can throw off results completely.

Let’s break it down step by step.

Step 1: Extracting Eye Landmarks

In dlib’s 68-point facial landmark model, the eyes are defined by these indices:

- Left Eye: Points 36-41

- Right Eye: Points 42-47

Here’s how I usually extract them:

    left_eye = landmarks.parts()[36:42]   # Left eye landmarks
    right_eye = landmarks.parts()[42:48]  # Right eye landmarks

These landmarks define the contours of the eyes. But raw landmark points aren’t enough; we need to crop out the exact eye region for further processing.

Step 2: Cropping the Eye Region

Here’s a simple way to extract the bounding box around each eye:

    def crop_eye_region(landmarks, eye_indices, frame):
        eye_points = [landmarks.part(n) for n in eye_indices]

        x_min = min(point.x for point in eye_points)
        y_min = min(point.y for point in eye_points)
        x_max = max(point.x for point in eye_points)
        y_max = max(point.y for point in eye_points)

        return frame[y_min:y_max, x_min:x_max]

    left_eye_region = crop_eye_region(landmarks, range(36, 42), frame)
    right_eye_region = crop_eye_region(landmarks, range(42, 48), frame)

This function dynamically crops each eye based on landmark positions.
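A tight box can clip the eyelids, and a landmark that falls slightly outside the frame yields a negative index, which NumPy slicing interprets as counting from the far edge. One possible refinement is to pad the box and clamp it to the frame. The sketch below is not part of the function above: `padded_eye_box` is a hypothetical helper, and the default `pad=5` pixels is an assumed value you would tune.

```python
def padded_eye_box(points, frame_shape, pad=5):
    """Bounding box (x_min, y_min, x_max, y_max) around points, padded and
    clamped to the frame.

    points is an iterable of (x, y) pairs; frame_shape is (height, width, ...)
    as returned by frame.shape.
    """
    points = list(points)
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    height, width = frame_shape[:2]
    x_min = max(min(xs) - pad, 0)
    y_min = max(min(ys) - pad, 0)
    x_max = min(max(xs) + pad, width)
    y_max = min(max(ys) + pad, height)
    return x_min, y_min, x_max, y_max
```

The returned values drop straight into the same slice, `frame[y_min:y_max, x_min:x_max]`, and keeping the box origin around is handy later for converting eye-crop coordinates back to full-frame coordinates.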

Step 3: Handling Variations in Eye Shapes & Lighting

Here’s where things get tricky.

- Eye shapes differ from person to person—some people naturally squint, others open their eyes wide. If you’re working with multiple users, your tracking must handle eye aspect ratio (EAR) changes dynamically.

- Lighting conditions are unpredictable. Shadows, reflections, and low light can distort the extracted eye region. I’ve found that applying adaptive histogram equalization (CLAHE) helps standardize contrast:

    import cv2

    clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))
    gray_eye = cv2.cvtColor(left_eye_region, cv2.COLOR_BGR2GRAY)
    equalized_eye = clahe.apply(gray_eye)

This simple tweak significantly improves robustness in low-light conditions.

6. Eye Pupil Detection & Tracking

Now that we have the eye region isolated, it’s time to find the pupil. This is where things get fun.

You might be wondering: Why not just use the darkest region of the eye?

In theory, that should work. But in reality, eyelashes, shadows, and reflections can interfere. I’ve tried several methods, but the most reliable one is binary thresholding + contour detection.

Step 1: Convert to Grayscale & Apply Thresholding

The first step is to binarize the eye region, making the pupil stand out:

    gray_eye = cv2.cvtColor(left_eye_region, cv2.COLOR_BGR2GRAY)
    _, threshold = cv2.threshold(gray_eye, 30, 255, cv2.THRESH_BINARY_INV)

cv2.THRESH_BINARY_INV inverts the colors, making the pupil white and the background black.

The threshold value 30 works well in most cases, but you can tweak it dynamically using adaptive thresholding:

    threshold = cv2.adaptiveThreshold(gray_eye, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 11, 2)

Step 2: Detecting the Pupil with Contours

Once the pupil is highlighted, we can detect it as a contour:

    contours, _ = cv2.findContours(threshold, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    contours = sorted(contours, key=lambda x: cv2.contourArea(x), reverse=True)

- cv2.RETR_EXTERNAL ensures we only detect outermost contours.

- Sorting contours by area helps in selecting the largest one, which is usually the pupil.

Step 3: Tracking Pupil Movement

Now, let’s find the center of the pupil and track it:

    if contours:
        (x, y, w, h) = cv2.boundingRect(contours[0])
        pupil_center = (x + w // 2, y + h // 2)
        cv2.circle(left_eye_region, pupil_center, 2, (0, 255, 0), -1)

This small green dot represents the pupil position, which we can now track frame-by-frame.
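The center of a bounding box can be pulled sideways when eyelashes or shadows merge into the contour. As an alternative worth trying, you could take the centroid of the thresholded pixels instead. The sketch below is a swapped-in technique, not the method used above: `mask_centroid` is a hypothetical helper that works on any binary mask (such as the thresholded eye image) and, as written, considers every nonzero pixel rather than only the largest contour.

```python
import numpy as np

def mask_centroid(mask):
    """Return the (x, y) centroid of nonzero pixels in a binary mask, or None."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None  # No pupil pixels found in this frame
    return int(round(xs.mean())), int(round(ys.mean()))
```

Returning `None` for an empty mask gives the caller an explicit signal to skip the frame, for instance during a blink.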

Pro Tip: Handling Edge Cases

Eye tracking fails when:

✅ The pupil is too close to the eyelid → Try adjusting the threshold dynamically.

✅ Glasses reflect too much light → Use polarized lighting or preprocess frames with glare reduction filters.

✅ The pupil is off-frame → Implement face re-detection every few frames to avoid drift.

7. Calculating Eye Movement (Gaze Tracking)

“The eyes may be the windows to the soul, but in eye tracking, they’re just a set of moving coordinates.”

When I first started with gaze tracking, I assumed it would be as simple as detecting the pupil and mapping its position.

Turns out, eye movement tracking is much more nuanced—simply detecting the pupil isn’t enough. You have to account for blinking, noise, and slight head movements that can throw off results.

Let’s break down how we can track gaze direction and detect blinks with a mathematical approach.

Step 1: Centroid Tracking for Gaze Direction

Once we’ve detected the pupil (from the previous step), we can track its position relative to the eye region. The idea is simple:

- If the pupil is towards the left side, the user is likely looking left.

- If it’s centered, they’re looking straight ahead.

- If it’s towards the right side, they’re looking right.

Here’s how I do it in code:

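    eye_center_x = left_eye_region.shape[1] // 2  # Middle of the eye region
    pupil_x = pupil_center[0]
    margin = left_eye_region.shape[1] // 6  # Dead zone around the center (example value; tune it)

    if pupil_x < eye_center_x - margin:
        gaze_direction = "Left"
    elif pupil_x > eye_center_x + margin:
        gaze_direction = "Right"
    else:
        gaze_direction = "Center"

Here margin is a pixel tolerance around the center. It is deliberately not called threshold, since that name already holds the binarized eye image from the previous section.

This method is lightweight and real-time friendly, but it has its limitations: it assumes the head remains relatively still. For head movement compensation, you’d need a more advanced approach like calibrating with screen coordinates.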
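Two small refinements tend to make this more usable in practice: expressing the dead zone as a fraction of the eye width, so it scales with distance from the camera, and smoothing the label over a few frames to damp jitter. The sketch below shows one way to do both. The names `classify_gaze` and `GazeSmoother`, the `margin_ratio=0.15` default, and the five-frame window are all assumptions for illustration.

```python
from collections import Counter, deque

def classify_gaze(pupil_x, eye_width, margin_ratio=0.15):
    """Label horizontal gaze from the pupil's x position inside the eye crop."""
    center = eye_width / 2
    margin = eye_width * margin_ratio
    if pupil_x < center - margin:
        return "Left"
    if pupil_x > center + margin:
        return "Right"
    return "Center"

class GazeSmoother:
    """Majority vote over the last few labels to damp frame-to-frame jitter."""

    def __init__(self, window=5):
        self.history = deque(maxlen=window)

    def update(self, label):
        self.history.append(label)
        return Counter(self.history).most_common(1)[0][0]
```

A larger window gives steadier output at the cost of reacting more slowly to real gaze shifts.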

Step 2: Eye Aspect Ratio (EAR) for Blink Detection

Now, let’s talk about blinking—a crucial component of eye tracking. One of the best ways to detect blinks is through the Eye Aspect Ratio (EAR).

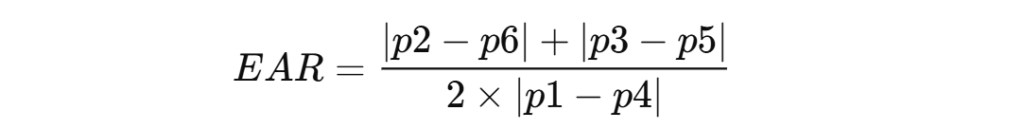

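The formula for EAR is:

$$\mathrm{EAR} = \frac{\lVert p_2 - p_6 \rVert + \lVert p_3 - p_5 \rVert}{2\,\lVert p_1 - p_4 \rVert}$$

Here $p_1, \dots, p_6$ are the six landmarks of one eye in order, with $p_1$ and $p_4$ at the corners (indices 0 to 5 in the code that follows).

This measures how “open” the eye is. When the EAR drops below a threshold, it indicates a blink.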

Here’s the implementation:

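    import numpy as np

    def calculate_ear(eye):
        # eye: the six (x, y) landmarks of one eye, in dlib order
        eye = np.asarray(eye, dtype=float)
        A = np.linalg.norm(eye[1] - eye[5])  # First vertical distance
        B = np.linalg.norm(eye[2] - eye[4])  # Second vertical distance
        C = np.linalg.norm(eye[0] - eye[3])  # Horizontal distance
        return (A + B) / (2.0 * C)

I’ve found that an EAR threshold of 0.2 works well for detecting blinks, but this varies slightly based on the individual and camera angle.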
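To get a feel for the numbers, here is a small worked example in plain Python. The coordinates are made up for illustration (they are not measured data), and `eye_aspect_ratio` is a standalone re-statement of the same ratio using `math.dist`, which requires Python 3.8 or newer.

```python
import math

def eye_aspect_ratio(points):
    """EAR for six (x, y) points ordered like dlib's eye landmarks."""
    p1, p2, p3, p4, p5, p6 = points
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

# Made-up coordinates for illustration only
open_eye = [(0, 0), (10, -6), (20, -6), (30, 0), (20, 6), (10, 6)]
closed_eye = [(0, 0), (10, -1), (20, -1), (30, 0), (20, 1), (10, 1)]

print(eye_aspect_ratio(open_eye))    # 0.4
print(eye_aspect_ratio(closed_eye))  # roughly 0.067
```

In this toy case, the open eye sits well above a 0.2 threshold and the nearly closed eye well below it, which is the separation blink detection relies on.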

Step 3: Detecting Blinks in Real-Time

Now, let’s implement blink detection in a loop:

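    EAR_THRESHOLD = 0.2
    CONSECUTIVE_FRAMES = 3  # Number of frames the eye must be closed

    # Initialize these once, before the capture loop
    blink_counter = 0
    total_blinks = 0

    # Run the lines below once per frame, inside the capture loop
    left_eye = np.array([(landmarks.part(n).x, landmarks.part(n).y) for n in range(36, 42)])
    ear = calculate_ear(left_eye)

    if ear < EAR_THRESHOLD:
        blink_counter += 1
    else:
        if blink_counter >= CONSECUTIVE_FRAMES:
            total_blinks += 1
        blink_counter = 0

This prevents false positives by ensuring the eye remains closed for a few consecutive frames before counting a blink.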
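If you prefer to keep the counters out of the main loop, the same logic can be wrapped in a small class. This is a sketch of one possible arrangement; the class name `BlinkCounter` and its parameter names are hypothetical, while the defaults mirror the values used above.

```python
class BlinkCounter:
    """Counts blinks from a stream of per-frame EAR values."""

    def __init__(self, ear_threshold=0.2, min_closed_frames=3):
        self.ear_threshold = ear_threshold
        self.min_closed_frames = min_closed_frames
        self.closed_frames = 0
        self.total_blinks = 0

    def update(self, ear):
        """Feed one EAR value; return True on the frame a blink completes."""
        if ear < self.ear_threshold:
            self.closed_frames += 1
            return False
        # Eye is open again: count a blink if it stayed closed long enough
        blinked = self.closed_frames >= self.min_closed_frames
        if blinked:
            self.total_blinks += 1
        self.closed_frames = 0
        return blinked
```

Inside the capture loop you would call `counter.update(ear)` once per frame and read `counter.total_blinks` whenever you need the running total.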

8. Real-Time Eye Tracking Implementation

At this point, we have:

✅ Pupil tracking (for gaze direction)

✅ Blink detection (for eye state analysis)

Now, let’s talk about real-time performance—because even the most accurate eye-tracking system is useless if it lags.

Step 1: Optimize with Region of Interest (ROI) Processing

Early on, I made a rookie mistake—I was processing the entire frame for every eye tracking step. This is a huge waste of computation. Instead, always crop the eye region first:

    roi = frame[y_min:y_max, x_min:x_max]

By only processing this smaller ROI, you can reduce the computational load by over 50%.
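To see what an optimization like this buys you on your own machine, it helps to measure frame rate rather than guess. Below is a minimal rolling FPS meter. `FPSMeter` is a hypothetical helper, the 30-frame window is an assumed default, and the optional `now` argument exists only so the timing can be supplied explicitly (for example in tests).

```python
import time
from collections import deque

class FPSMeter:
    """Rolling frames-per-second estimate over the last `window` frames."""

    def __init__(self, window=30):
        self.timestamps = deque(maxlen=window)

    def tick(self, now=None):
        """Record one frame; return the current FPS estimate (0.0 until two frames)."""
        self.timestamps.append(time.perf_counter() if now is None else now)
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        return (len(self.timestamps) - 1) / elapsed if elapsed > 0 else 0.0
```

Calling `meter.tick()` once per loop iteration and comparing the reading before and after a change gives a direct view of its effect.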

Step 2: Multithreading for Smoother Performance

If you’re working with real-time applications, OpenCV alone isn’t enough. You need to offload computations using multithreading.

Here’s how I run face detection and eye tracking in parallel:

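    from threading import Thread

    def process_frame():
        while True:
            ret, frame = cap.read()
            if not ret:  # Stop when the camera stops delivering frames
                break
            # Face & eye tracking code here

    # daemon=True lets the program exit even if this thread is still running
    thread = Thread(target=process_frame, daemon=True)
    thread.start()

This way, the tracking doesn’t block the main application loop, making performance significantly smoother.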
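A common variation on this idea is to let the background thread do nothing but grab frames, keeping only the most recent one, so the processing loop never works on a stale frame. The sketch below illustrates that pattern under assumptions: `LatestFrameGrabber` is a hypothetical class, and `read_fn` is any callable that returns an `(ok, frame)` pair the way `cap.read` does.

```python
import threading

class LatestFrameGrabber:
    """Reads frames on a background thread and keeps only the newest one."""

    def __init__(self, read_fn):
        self._read_fn = read_fn  # e.g. cap.read from cv2.VideoCapture
        self._lock = threading.Lock()
        self._latest = None
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def _run(self):
        while not self._stopped.is_set():
            ok, frame = self._read_fn()
            if not ok:  # Source exhausted or camera error
                break
            with self._lock:
                self._latest = frame

    def latest(self):
        """Return the most recent frame, or None if nothing has arrived yet."""
        with self._lock:
            return self._latest

    def stop(self):
        self._stopped.set()
        self._thread.join()
```

With a webcam you would create it as `LatestFrameGrabber(cap.read).start()`, call `latest()` from the main loop, and call `stop()` before releasing the capture.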

Conclusion & Next Steps

Building an eye-tracking system with OpenCV is a rewarding project, and if you’ve followed along this far, you’ve built something that’s both practical and powerful.

We covered everything from face detection and landmark extraction to pupil tracking, gaze detection, and real-time optimization. While this system is effective, there’s always room for improvement.

Potential Enhancements

From my own experience, here are a few ways you can take this project even further:

✅ Deep Learning Models for Improved Accuracy: While dlib’s 68-point model is reliable, CNN-based models (like OpenCV’s DNN module or MediaPipe) can offer better robustness, especially under challenging lighting conditions or head movements.

✅ Multi-Camera Setups: If you’re serious about precision, consider adding multiple camera angles. This helps compensate for occlusions and improves tracking accuracy.

✅ Calibrated Gaze Mapping: For applications like screen control or gaming, mapping gaze coordinates to precise screen positions can unlock exciting possibilities.
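As a starting point for the calibrated mapping idea, here is a deliberately simple two-point, horizontal-only sketch. It assumes you record the pupil ratio (`pupil_x / eye_width`) while the user looks at the left and right screen edges, then interpolate linearly between them. The function name `make_gaze_mapper` and all of its parameters are hypothetical, and a real calibration would typically use more points and both axes.

```python
def make_gaze_mapper(ratio_left, ratio_right, screen_width):
    """Build a function mapping a horizontal pupil ratio to a screen x position.

    ratio_left and ratio_right are the pupil ratios recorded while the user
    looks at the left and right screen edges.
    """
    span = ratio_right - ratio_left
    if span == 0:
        raise ValueError("Calibration points must differ")

    def to_screen_x(ratio):
        t = (ratio - ratio_left) / span
        t = min(max(t, 0.0), 1.0)  # Clamp so the result stays on screen
        return round(t * (screen_width - 1))

    return to_screen_x
```

For example, `make_gaze_mapper(0.3, 0.7, 1920)` returns a function that maps a ratio of 0.3 to pixel 0 and a ratio of 0.7 to pixel 1919.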

Your Turn to Experiment

I’d strongly encourage you to experiment with different techniques—adjust EAR thresholds, try different contour methods for pupil detection, or even explore infrared cameras for low-light tracking.

If you run into issues, remember that troubleshooting is part of the learning process. I’ve spent countless hours tweaking parameters and testing conditions to improve my own models.

Feel free to share your results, insights, or improvements—I’d love to hear how your eye-tracking system evolves. After all, the best ideas often come from collaboration.
